Dune Analytics: Freeing Bandwidth & Money to Level Up

Before Dune Analytics launched in 2018, there was no easy way to answer arbitrary questions about Ethereum activity with data, or to look back and see how that data changed over time. A few sites reported specific metrics, but none offered transparency or the flexibility to interact with the underlying data. Without that, it was hard to benchmark metrics across different dApps in a meaningful way. Lacking the right data, community discussions and product decisions were often based on apples-to-oranges comparisons, or made without considering data at all.

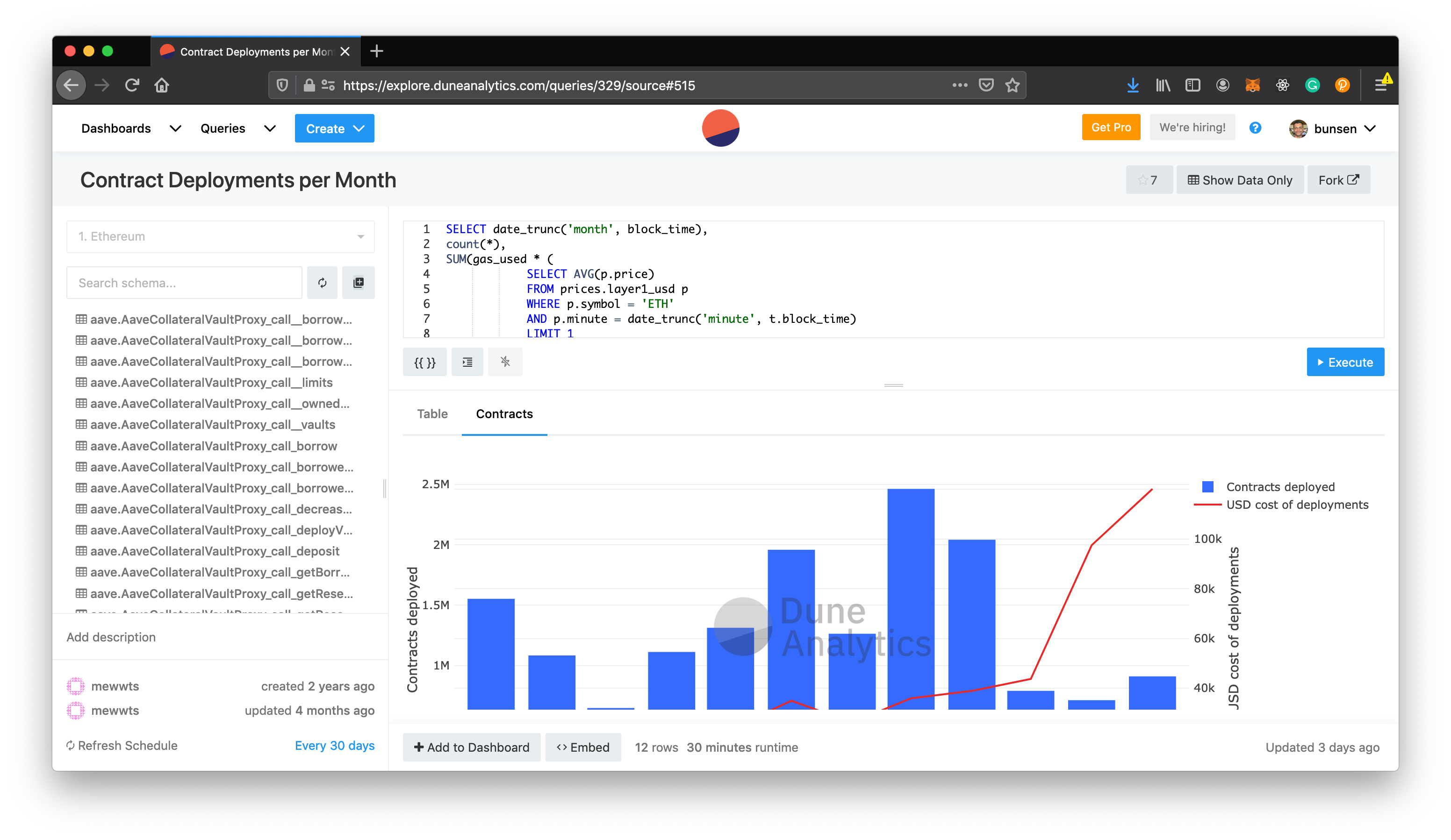

Dune Analytics lets the Ethereum community query Ethereum data with SQL, visualize the results, and share them with the world in a matter of minutes. You can build your own dashboard of polished charts with a plug-and-play experience. Dune Analytics provides all the smart contract events, contract calls, and Ethereum transactions in real time. It also provides token/USD prices that you can easily join against to compute the USD volume of on-chain activity.

Beyond the data and tooling, it's easy to explore the many analyses that fellow community members have created with Dune. If one inspires you, you can fork the query with a single click to make it your own. You no longer have to guess at what is actually happening on-chain.
